Consistent hashing is a special kind of hashing such that when a hash table is resized, only K/n keys need to be remapped on average, where K is the number of keys and n is the number of slots. In contrast, in most traditional hash tables, a change in the number of array slots causes nearly all keys to be remapped.

Consistent hashing achieves the same goals as Rendezvous hashing (also called HRW Hashing). The two techniques use different algorithms and were devised independently and contemporaneously.

Problem with Distributed Cache

The need for consistent hashing arose from limitations experienced while running collections of caching machines - web caches, for example. If you have a collection of n cache machines, then a common way of load balancing across them is to put object o in cache machine number hash(o) mod n. This works well until you add or remove cache machines (for whatever reason), for then n changes and nearly every object is hashed to a new location. This can be catastrophic since the originating content servers are swamped with requests from the cache machines. It’s as if the cache suddenly disappeared. Which it has, in a sense. (This is why you should care - consistent hashing is needed to avoid swamping your servers!)
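To get a feel for how badly hash(o) mod n behaves when n changes, here is a minimal Python sketch. The MD5-based `stable_hash` helper, the object names, and the machine counts (4 growing to 5) are assumptions chosen for illustration, not taken from any particular cache deployment.

```python
import hashlib


def stable_hash(key: str) -> int:
    """Deterministic 32-bit hash: the first 4 bytes of an MD5 digest (an assumed choice)."""
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:4], "big")


def mod_n_machine(key: str, n: int) -> int:
    """Classic placement: object o goes to machine number hash(o) mod n."""
    return stable_hash(key) % n


def moved_fraction(keys, old_n: int, new_n: int) -> float:
    """Fraction of keys whose machine changes when the machine count changes."""
    moved = sum(1 for k in keys if mod_n_machine(k, old_n) != mod_n_machine(k, new_n))
    return moved / len(keys)


# Hypothetical workload: 10,000 object names, one cache machine added.
keys = [f"object-{i}" for i in range(10_000)]
fraction = moved_fraction(keys, old_n=4, new_n=5)
print(f"{fraction:.1%} of keys changed machine when n went from 4 to 5")
```

For uniformly distributed hashes, a key keeps its machine here only when its hash gives the same remainder mod 4 and mod 5, which happens for 4 out of every 20 hash values. So roughly four in five keys should move in this scenario, and each of those is a cache miss that falls through to the content servers.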

Consistent Hashing

Consistent hashing was first described in a paper, Consistent hashing and random trees: Distributed caching protocols for relieving hot spots on the World Wide Web (1997) by David Karger et al. It is used in distributed storage systems like Amazon Dynamo, memcached, Project Voldemort and Riak.

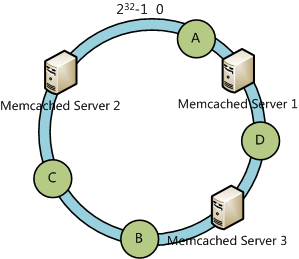

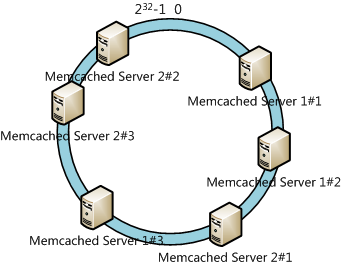

We assume that hash values fall in the range [0, 2^32 - 1]. We calculate the hash value of each server (for example, from its IP address or hostname) and place it on the ring, as the figure shows.

Suppose we have 4 data objects. We calculate the hash value of each data object and place it on the hash ring, as the figure below shows:

According to the consistent hash algorithm, data object A will be located on Server 1, data object D will be located on Server 3, and data objects B and C will be located on Server 2.
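The lookup itself can be sketched in a few lines of Python. This sketch assumes the usual convention that an object belongs to the first server met when moving clockwise from the object's position, wrapping around at the end of the range. The `HashRing` class, the MD5-based `ring_hash`, and the server names are hypothetical, so the assignments it prints will not necessarily match the figure.

```python
import bisect
import hashlib


def ring_hash(key: str) -> int:
    """Map a string to a position in [0, 2^32 - 1] (first 4 bytes of MD5, an assumed choice)."""
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:4], "big")


class HashRing:
    """Minimal ring: one point per server, lookups walk clockwise."""

    def __init__(self, servers):
        # Sort servers by their position on the ring.
        points = sorted((ring_hash(s), s) for s in servers)
        self._hashes = [h for h, _ in points]
        self._servers = [s for _, s in points]

    def lookup(self, key: str) -> str:
        if not self._hashes:
            raise ValueError("the ring has no servers")
        # First server position at or after the key's position...
        idx = bisect.bisect_left(self._hashes, ring_hash(key))
        # ...wrapping around to the first server if we ran off the end.
        if idx == len(self._hashes):
            idx = 0
        return self._servers[idx]


ring = HashRing(["Server 1", "Server 2", "Server 3"])
for name in ["A", "B", "C", "D"]:
    print(f"data object {name} -> {ring.lookup(name)}")
```

Keeping the server positions in a sorted list makes each lookup a binary search, which is one common way to implement the ring.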

Error Tolerance and Scalability

In this section, we are going to talk about the error tolerance and scalability in a consistent hashing algorithm.

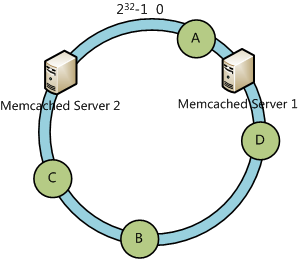

Suppose that Server 3 is down, as the figure shows below:

We’ll find that data objects A, B, and C are still available. Data object D, which was stored on Server 3, will now be located on Server 2.

With an ordinary hashing scheme such as hash(o) mod n, the locations of nearly all data objects would change. With consistent hashing, only the data objects that lived on the failed server are relocated.

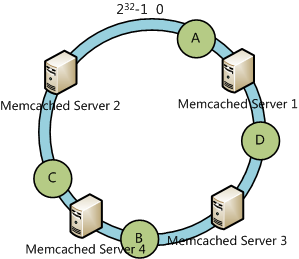

OK, what if we add a server to the hash ring?

We’ll find that only the data object B is relocated to Server 4.
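Both cases, losing a server and adding one, can be checked with a small Python experiment. It is only a sketch: `build_ring`, `owner`, the MD5-based `ring_hash`, and the object names are assumptions, and lookups use the usual clockwise rule.

```python
import bisect
import hashlib


def ring_hash(key: str) -> int:
    """Position in [0, 2^32 - 1], taken from the first 4 bytes of MD5 (an assumed choice)."""
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:4], "big")


def build_ring(servers):
    """Return parallel lists of sorted ring positions and the servers at those positions."""
    points = sorted((ring_hash(s), s) for s in servers)
    return [h for h, _ in points], [s for _, s in points]


def owner(ring, key: str) -> str:
    """First server clockwise from the key; the modulo wraps past the last point."""
    hashes, names = ring
    idx = bisect.bisect_left(hashes, ring_hash(key)) % len(hashes)
    return names[idx]


keys = [f"object-{i}" for i in range(2_000)]

before = build_ring(["Server 1", "Server 2", "Server 3"])
after_removal = build_ring(["Server 1", "Server 2"])
after_addition = build_ring(["Server 1", "Server 2", "Server 3", "Server 4"])

moved_on_removal = [k for k in keys if owner(before, k) != owner(after_removal, k)]
moved_on_addition = [k for k in keys if owner(before, k) != owner(after_addition, k)]

print(f"Server 3 removed: {len(moved_on_removal)} of {len(keys)} objects moved")
print(f"Server 4 added:   {len(moved_on_addition)} of {len(keys)} objects moved")
```

In this sketch, every object that moves after the removal was previously on Server 3, and every object that moves after the addition ends up on Server 4. All other objects stay where they were.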

Virtual Nodes

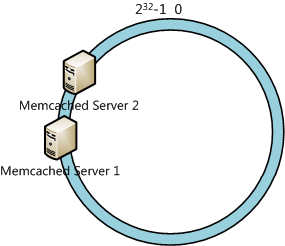

It seems that the algorithm works well. However, the size of the intervals assigned to each cache is pretty hit-and-miss. Since the placement is essentially random, it is possible to have a very non-uniform distribution of objects between caches. The solution to this problem is to introduce the idea of “virtual nodes”, which are replicas of cache points in the circle. So whenever we add a cache, we create a number of points in the circle for it.

In the figure above, Server 1 will clearly store most of the data objects, so the load on the hash ring is unbalanced. To solve this problem, we use virtual nodes, which we can create by appending serial numbers to the IP address or hostname of each server. For the case mentioned above, we create 3 virtual nodes for each server: Memcached Server 1#1, Memcached Server 1#2, Memcached Server 1#3, and so on, giving 6 virtual nodes in total.

The data objects that map to Memcached Server 1#1, Memcached Server 1#2, and Memcached Server 1#3 are all actually stored on Memcached Server 1. This evens out the non-uniform distribution of objects between caches.
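The effect of virtual nodes on balance can be sketched in Python as well. The naming scheme `Server 1#1`, `Server 1#2`, ... follows the serial-number idea above. The helper names, the MD5-based `ring_hash`, the object names, and the virtual node counts (1, 3, and 200) are assumptions for illustration.

```python
import bisect
import hashlib
from collections import Counter


def ring_hash(key: str) -> int:
    """Position in [0, 2^32 - 1], taken from the first 4 bytes of MD5 (an assumed choice)."""
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:4], "big")


def build_ring(servers, vnodes: int):
    """Place `vnodes` points per server on the ring; each point maps back to its real server."""
    points = []
    for server in servers:
        for i in range(1, vnodes + 1):
            points.append((ring_hash(f"{server}#{i}"), server))
    points.sort()
    return [h for h, _ in points], [s for _, s in points]


def owner(ring, key: str) -> str:
    """First virtual node clockwise from the key, resolved to its physical server."""
    hashes, names = ring
    idx = bisect.bisect_left(hashes, ring_hash(key)) % len(hashes)
    return names[idx]


def load(servers, vnodes: int, keys) -> Counter:
    """Count how many keys each physical server ends up storing."""
    ring = build_ring(servers, vnodes)
    return Counter(owner(ring, k) for k in keys)


servers = ["Server 1", "Server 2"]
keys = [f"object-{i}" for i in range(10_000)]
for vnodes in (1, 3, 200):
    counts = load(servers, vnodes, keys)
    shares = {s: f"{counts[s] / len(keys):.1%}" for s in servers}
    print(f"{vnodes:>3} virtual nodes per server: {shares}")
```

With only a few virtual nodes the split can still be lopsided. Larger counts tend to even it out, at the cost of a bigger ring to store and search. The exact shares depend on the hash function and the names used.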

Usage

So how can you use consistent hashing? You are most likely to meet it in a library, rather than having to code it yourself. For example, as mentioned above, Memcached, a distributed memory object caching system, now has clients that support consistent hashing. Last.fm’s ketama by Richard Jones was the first, and there is now a Java implementation by Dustin Sallings. It is interesting to note that it is only the client that needs to implement the consistent hashing algorithm - the Memcached server is unchanged. Other systems that employ consistent hashing include Chord, which is a distributed hash table implementation, and Amazon’s Dynamo, which is a key-value store (not available outside Amazon).
